A human-computer collaborative workflow for the acquisition and analysis of terrestrial insect movement in behavioral field studies

August 1, 2013

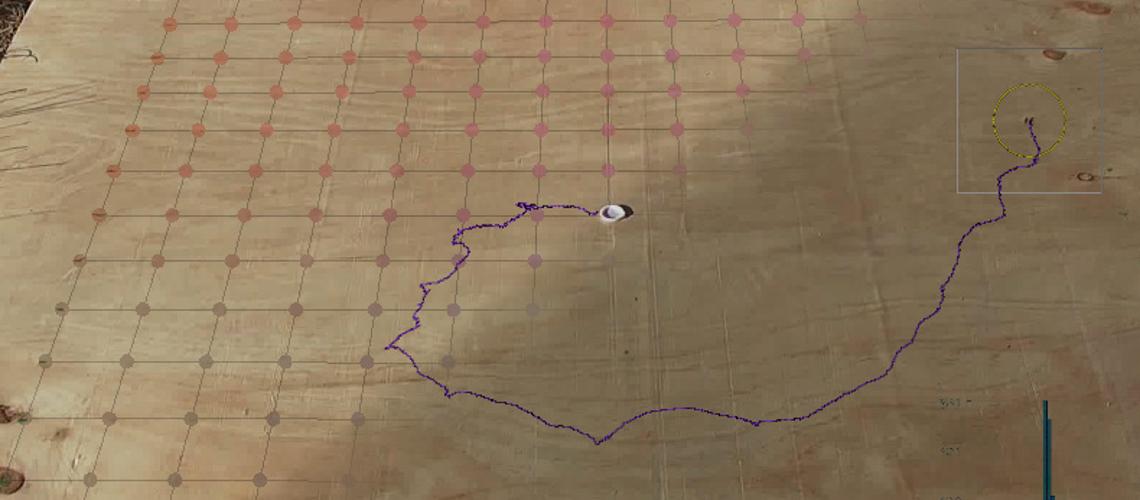

Studying insect behavior from video sequences poses many challenges. Despite advances in image processing, the current generation of insect tracking tools is effective only in controlled lab environments under ideal lighting conditions. Very few tools can track insects outdoors, in the environments where insects naturally operate. Furthermore, most tools focus on the first stage of the analysis workflow, namely acquiring movement trajectories from video sequences. Far less effort has gone into developing specialized techniques for characterizing insect movement patterns once those trajectories have been extracted. In this paper, we present a human-computer collaborative workflow for acquiring and analyzing insect behavior from field-recorded videos. We employ a human-guided video processing method to identify and track insects in noisy videos with dynamic lighting conditions and unpredictable visual scenes, improving tracking precision by 20% to 44% compared to traditional automated methods. The workflow also incorporates a novel visualization tool for large-scale exploratory analysis of insect trajectories, as well as a set of quantitative methods for statistical hypothesis testing. Together, these components provide end-to-end quantitative and qualitative methods for studying insect behavior from field-recorded videos. We demonstrate the effectiveness of the proposed workflow with a field study on the navigational strategies of Kenyan seed harvester ants.